As many regular readers of this blog know, I use R for most of my data work. There have been a few occasions when I’ve tried to use Python, but I found that I’m far less efficient in it than I am with R, and so abandoned it, despite the relative ease of putting Python code into production.

Now in my company, like in most companies, people use both Python and R (the team that reports to me largely uses R, everyone else largely uses Python). And while till recently I used to claim that I’m multilingual in the sense that I can read Python code fairly competently, of late I’m not sure I am. I find it increasingly difficult to parse and read production-grade Python code.

And now, after some experiments with ChatGPT, and exploring other people’s code, I have an idea of why I’m finding it hard to read production-grade Python code. It has to do with “code density”.

Of late I’ve been experimenting with Spark (finally, in this job I do a lot of “big data” work, something I never had to do in my consulting career prior to this). Related to this, I was reading someone’s PySpark code.

And then it hit me – the problem (rather, my problem) with Python is that it is far more verbose than R. The number of characters or lines of code required to do something in Python is far greater than what you need in R (especially if you are using the tidyverse family of packages, which I do, including sparklyr for Spark).

Why does the density of code matter? It has to do with aesthetics, modularity and ease of understanding.

Yesterday I was writing some code that I plan to put into production. After a few hours of coding, I looked at the code and felt disgusted with myself – it was a way too long monolithic block of code. It might have been good when I was writing it, but I knew that if I were to revisit it in a week or two, I wouldn’t be able to understand what the hell was happening there.

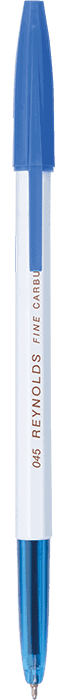

I’ve never worked as a professional software engineer, but with the amount of coding I’ve done, I’ve worked out what is a “reasonable length for a code block”. It’s like that apocryphal story of Indian public examiners for high school exams who evaluate history answers based on how long they are – “if they were to place an ordinary Reynolds 045 pen vertically on the sheet, the answer should be longer than that for the student to get five marks”.

It’s the reverse here. Approximately speaking, if you were to place a Reynolds pen vertically on screen (at your normal font size), no function (or code block) can be longer than the pen.

This roughly approximates how much the eye can take in on one normal MacBook screen (I use a massive external monitor at work, and a less massive, but equally wide, one at home). If you have to keep scrolling up and down to understand the full logic, there is a higher chance that you will make mistakes, and it becomes harder for someone else to understand the code.
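The pen rule can be turned into a rough automated check. What follows is only a sketch under stated assumptions: it assumes a pen covers about 40 lines on screen (a made-up number, to be tuned to your own font size), and the names `PEN_LINES` and `functions_longer_than` are hypothetical, not from any library. It uses Python's standard `ast` module to measure how many lines each function spans.

```python
import ast

# Assumption: a pen held against the screen covers roughly 40 lines of code.
PEN_LINES = 40


def functions_longer_than(source, max_lines=PEN_LINES):
    """Return (name, length) pairs for functions spanning more than max_lines."""
    tree = ast.parse(source)
    too_long = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # lineno is the `def` line, end_lineno the last line of the body.
            length = node.end_lineno - node.lineno + 1
            if length > max_lines:
                too_long.append((node.name, length))
    return sorted(too_long)


if __name__ == "__main__":
    # Build a toy module: one 2-line function and one 52-line function.
    short_fn = "def short():\n    return 1\n"
    long_body = "".join(f"    x{i} = {i}\n" for i in range(50))
    long_fn = "def long():\n" + long_body + "    return 0\n"
    for name, length in functions_longer_than(short_fn + long_fn):
        print(f"{name} is {length} lines, longer than the pen")
```

Counting lines is of course a crude proxy: it says nothing about how much logic is packed into each line, which is the whole point of the density argument.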

Till recently (as in earlier this week) I would crib like crazy that people coding in Python would make their code “too modular”. That I would have to keep switching back and forth between different functions (and classes!!) to make sense of some logic, and about how that would make the code hard to debug (I still think there is a limit to how modular you can make your ETL code).

Now, however (I’m writing this on a Saturday – I’m not working today), from the code density perspective, it all makes sense to me.

The advantage of R is that because the code is far denser, you can pack in a far greater amount of logic in a Reynolds pen length of code. So over the years I’ve gotten used to having this much logic being presented to me in one chunk (without having to scroll or switch functions).

The relatively lower density of Python, however, means that the same amount of logic that would be one function in R is now split across several different functions. It is not that the writer of the code is “making this too modular” or “writing functions just for the heck of it”. It is just that their “mental Reynolds pens” don’t allow them to pack more lines into a chunk or function, and Python’s density means there is only so much logic that can go in there.

As part of my undergrad, I did a course on Software Engineering (and the one thing the professor told us then was that we should NOT take up software engineering as a career – “it’s a boring job”, he had said). In that, one of the things we learnt was that in conventional software services contexts, billing would happen as a (nonlinear) function of “kilo lines of code” (this was in early 2003).

Now, looking back, one thing I can say is that the rate per kilo line of R code ought to be much higher than the rate per kilo line of Python code.

Cross-posted on my now-largely-dormant Art of Data Science newsletter