David Henderson at Econlog quotes a doctor on an interesting and important point about Bayesian priors. He writes:

Later, when I went to see his partner, my regular doctor, to discuss something else, I mentioned that incident. He smiled and said that one of the most important lessons he learned from one of his teachers in medical school was:

When you hear hooves, think horses, not zebras.

This was after he had some symptoms that are correlated with a heart attack, panicked, and called his doctor. He was treated for gas trouble and was absolutely fine after that.

Our problem is that when we have symptoms that are correlated with something bad, we immediately assume that it’s the bad thing that has happened, and panic. In the process we neither consider alternative explanations nor do a Bayesian analysis.

Let me illustrate with a personal example. Back when I was a schoolboy, and I wouldn’t return home from school at the right time, my mother would panic. This was the time before cellphones, remember, and she would just assume that “the worst” had happened and that I was in trouble somewhere. Calls would go to my father’s office, and he would ask her to wait, though to my credit I was never so late that they had to take any further action.

Now, coming home late from school can happen for a variety of reasons. Let us eliminate reasons such as wanting to play basketball for a while before returning, since such activities were “usual” and had been budgeted for. So let’s assume that there are two possible reasons I’m late. The first is that I had gotten into trouble: I had either been knocked down on my way home or been kidnapped. The second is that the BTS (Bangalore Transport Service, as it was then called) schedule had gone completely awry, thanks to which I had missed my usual set of buses and was thus delayed. Note that my not turning up at home until a certain point of time was a symptom of both of these.

Having noticed such a symptom, my mother would automatically come to the “worst case” conclusion (that I had been knocked down or kidnapped), and panic. But then I’m not sure that was the more rational reaction. What she should have done was to do a Bayesian analysis and use that to guide her panic.

Let A be the event that I’d been knocked over or kidnapped, and B be the event that the bus schedule had gone awry. Let L(t) be the event that I haven’t gotten home till time t, and that such an event has been “observed”. The question is: with L(t) having been observed, what are the odds of A and B having happened? Bayes’ Theorem gives us an answer. The equation is rather simple:

$$P(A \mid L(t)) = \frac{P(A)\,P(L(t) \mid A)}{P(A)\,P(L(t) \mid A) + P(B)\,P(L(t) \mid B)}$$

$P(B \mid L(t))$ is just one minus the above quantity (we assume that there is nothing else that can cause L(t)).

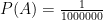

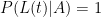
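The same update can also be read in odds form. This is a standard restatement of Bayes’ Theorem, not something the derivation above spells out. Dividing the posterior for A by the posterior for B cancels the common denominator:

$$\frac{P(A \mid L(t))}{P(B \mid L(t))} = \frac{P(A)}{P(B)} \times \frac{P(L(t) \mid A)}{P(L(t) \mid B)}$$

The first factor is the prior odds of trouble versus a bus mess-up, and the second is the likelihood ratio. Only the second factor depends on t, so any change in the answer as the delay grows has to come through it.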

So now let us give values. I’m too lazy to find the data now, but let’s say we find from the national crime data that the odds of a fifteen-year-old boy being in an accident or kidnapped on a given day are one in a million. And if that happens, then L(t) obviously gets observed. So we have $P(A) = 10^{-6}$ and $P(L(t) \mid A) = 1$.

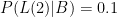

The BTS was notorious back in the day for its delayed and messed up schedules. So let us assume that $P(B)$ is 0.01. Now, $P(L(t) \mid B)$ is tricky, and is the reason the (t) qualifier has been added to L. The larger t is, the smaller the value of $P(L(t) \mid B)$. If there is a bus schedule breakdown, there is probably a 50% probability that I’m not home an hour after “usual”. But there is only a 10% probability that I’m not home two hours after “usual” because a bus breakdown happened. So $P(L(1) \mid B) = 0.5$ and $P(L(2) \mid B) = 0.1$, with t measured in hours past “usual”.

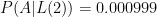

Now let’s plug in and, based on how delayed I was, find the odds that I was knocked down/kidnapped. If I were late by an hour,

$$P(A \mid L(1)) = \frac{10^{-6} \times 1}{10^{-6} \times 1 + 0.01 \times 0.5} \approx 0.0002$$

or about 1/5000. In other words, if I hadn’t got home an hour after my usual time, the odds that I had been knocked down or kidnapped were just one in five thousand!

What if I hadn’t come home two hours after my normal time? Again we can plug into the formula, and here we find that $P(A \mid L(2)) \approx 0.001$, or one in a thousand! So notice that the later I am, the higher the odds that I’m in trouble. Yet the numbers (admittedly based on the handwaving assumptions above) are small enough for us to not worry!
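For anyone who wants to play with the numbers, here is a minimal Python sketch of the calculation. The function and variable names are made up for illustration. The value `P_B = 0.01` is an assumption, chosen because it reproduces the one-in-five-thousand and one-in-a-thousand figures; the other inputs are the handwaving values from the text.

```python
def posterior_trouble(prior_a, lik_a, prior_b, lik_b):
    """Return P(A | L(t)), treating A and B as the only possible causes of L(t)."""
    weight_a = prior_a * lik_a  # P(A) * P(L(t) | A)
    weight_b = prior_b * lik_b  # P(B) * P(L(t) | B)
    return weight_a / (weight_a + weight_b)


P_A = 1e-6         # prior: knocked down or kidnapped on a given day
P_L_GIVEN_A = 1.0  # if that happens, I am certainly not home
P_B = 0.01         # ASSUMPTION: prior probability of a bus schedule breakdown
P_L_GIVEN_B = {1: 0.5, 2: 0.1}  # hours late -> P(L(t) | B)

# Posterior probability of trouble for each delay
posteriors = {
    hours: posterior_trouble(P_A, P_L_GIVEN_A, P_B, lik_b)
    for hours, lik_b in P_L_GIVEN_B.items()
}

for hours, p in posteriors.items():
    print(f"{hours} hour(s) late: P(A | L) = {p:.6f}, roughly 1 in {round(1 / p)}")
```

Changing `P_A` or `P_B` shows how sensitive the conclusion is to the priors: the posterior only gets worrying when the prior for trouble stops being tiny compared with the prior for a bus mess-up.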

Bayesian reasoning has its implications elsewhere, too. There is the medical case, as Henderson’s blogpost illustrates. Then we can use this to determine whether a wrong act was due to stupidity or due to malice. And so forth.

But what Henderson’s doctor told him is truly an immortal line:

When you hear hooves, think horses, not zebras.